Reviewer Mentoring Program Survey

Rationale for the reviewer mentoring program survey

ACL-IJCNLP’21 will provide a mentoring program for first-time reviewers. We will publish detailed information on this blog in the next few days. In today’s blog post, we want to share with the community a survey we conducted among Senior Area Chairs (SACs) and Area Chairs (ACs). During our mentoring program preparations, we asked SACs and ACs what they expect of reviews, including the impact of code, appendices and data; the expected reviewing time per paper; what they would want to see in a reviewing tutorial video for first-time reviewers; and what they expect from the rebuttal phase.

Because some of these questions and answers go beyond the scope of the reviewer mentoring program, and are probably of interest to all colleagues, we want to publicly share the main results of that survey here.

We first want to thank all 130 Senior Area Chairs and Area Chairs (24 SACs and 104 ACs) who took the time to answer this survey. A couple of questions were open-ended and are not included in the statistics reported here. We are grateful for the many helpful comments submitted in answer to the open questions “how to evaluate when there are enough experiments for leaning towards acceptance?” and “What should such a video tutorial(s) cover besides what is mentioned above? (which important aspect we missed?)”. Your input was carefully read, and it will influence how we proceed this year.

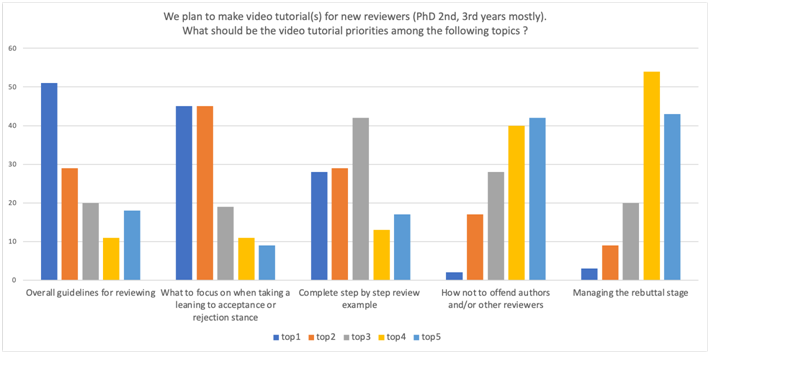

For the multiple-choice questions, we first asked SACs and ACs to rank some ideas we had in mind for a general reviewing tutorial video for first-time reviewers. The question was as follows: “We plan to make video tutorial(s) for new reviewers (PhD 2nd, 3rd years mostly). What should be the video tutorial priorities among the following topics? Sort from most important (top) to least important (bottom).”

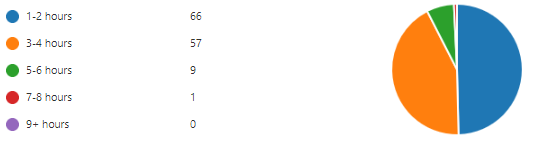

We then asked them how much time a reviewer should spend, on average, reviewing a short paper and a long paper respectively. (If you are a first-time reviewer, please plan for the upper bound.)

For short papers:

For long papers:

Then, we asked them how they feel about supplementary materials, mainly appendices, source code and data.

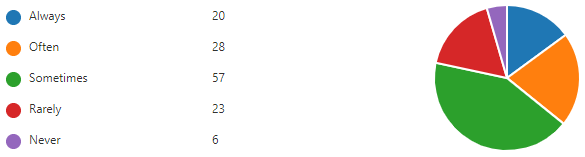

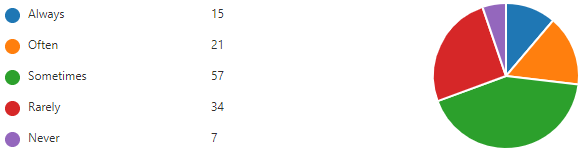

“Do you feel that reviewers for a conference like ACL/EMNLP/COLING should check *appendices*?”

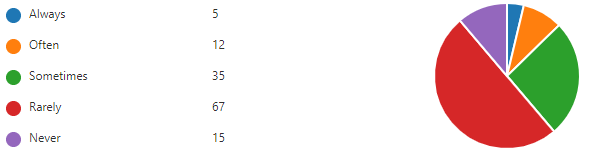

“Do you feel that reviewers for a conference like ACL/EMNLP/COLING should check *code*?”

“Do you feel that reviewers for a conference like ACL/EMNLP/COLING should check *data*?”

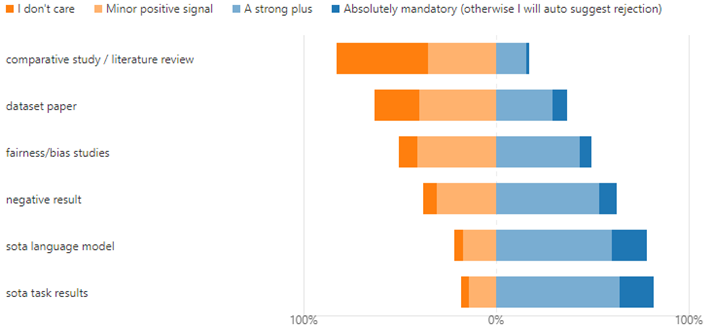

In the case of source code, we wanted to evaluate how important its availability is to ACs and SACs when making their decision, depending on the main paper categories we could think of. “When do you think the availability of the source code is needed for you to make a decision?”

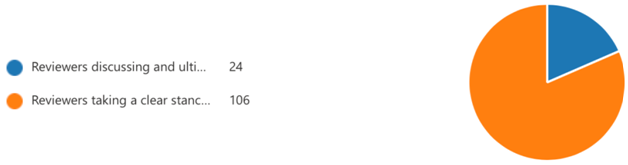

Next, a binary question shows that ACs and SACs prefer that the rebuttal lets reviewers ultimately take a clear stance supported by arguments, rather than trying to reach a consensus at all costs. “What do you *mostly* expect at the rebuttal stage?

- Reviewers discussing and ultimately resolving any conflicts (I would rather avoid review scores discrepancies)

- Reviewers taking a clear stance and providing arguments (I don’t mind managing different scores as long as I have supporting arguments)”

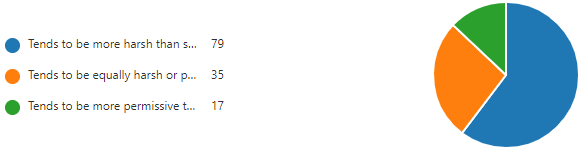

Finally, we asked them how they perceive the “harshness” of first-time reviewers, on average, compared with seasoned reviewers. This one should be taken with a grain of salt, since SACs and ACs are the seasoned reviewers :-)

“From your experience, do first-time reviewers:

- Tend to be harsher than seasoned reviewers

- Tend to be as harsh or as permissive as seasoned reviewers

- Tend to be more permissive than seasoned reviewers”

We hope that you find these statistics useful, whether you are an SAC, an AC, a reviewer or an author!

Stay tuned: we will publish the mentoring program description on the ACL-IJCNLP blog very soon.